Unlock the power of intelligent decision-making with An Introduction to Reinforcement Learning.

Introduction

Reinforcement learning is a subfield of machine learning that focuses on training agents to make sequential decisions in an environment to maximize a cumulative reward. It is inspired by how humans and animals learn through trial and error. In reinforcement learning, an agent interacts with an environment, takes actions, and receives feedback in the form of rewards or penalties. Through this iterative process, the agent learns to optimize its decision-making strategy to achieve long-term goals. Reinforcement learning has been successfully applied to various domains, including robotics, game playing, and autonomous systems. This introduction provides a brief overview of the fundamental concepts and techniques used in reinforcement learning.

The Basics of Reinforcement Learning: An Introduction

Reinforcement learning is a branch of artificial intelligence that focuses on training agents to make decisions based on trial and error. It is a powerful tool that has been used to solve complex problems in various fields, including robotics, game playing, and autonomous driving. In this article, we will provide an introduction to the basics of reinforcement learning, explaining its key concepts and how it works.

At its core, reinforcement learning is about learning from interactions with an environment. The agent, which can be a computer program or a robot, takes actions in the environment and receives feedback in the form of rewards or punishments. The goal of the agent is to maximize its cumulative reward over time by learning which actions lead to positive outcomes and which ones should be avoided.

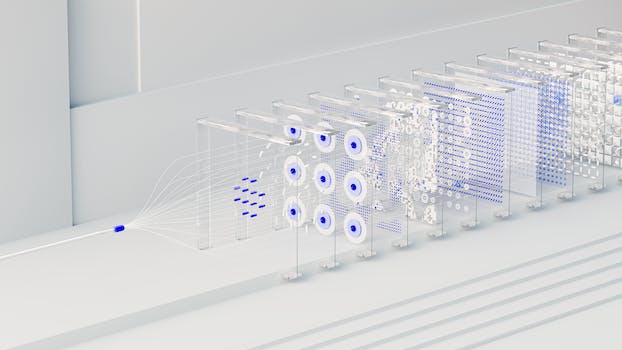

To achieve this, reinforcement learning relies on the concept of a Markov Decision Process (MDP). An MDP is a mathematical framework that models the interaction between an agent and an environment. It consists of a set of states, actions, transition probabilities, and rewards. The agent's goal is to find an optimal policy, which is a mapping from states to actions that maximizes the expected cumulative reward.
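To make these MDP components concrete, here is a minimal Python sketch of a tiny, hypothetical MDP: four states on a line, where state 3 is terminal and entering it gives a reward of 1. It is solved with value iteration, a classic planning algorithm that assumes the transition probabilities and rewards are already known. The transitions are deterministic for simplicity, and the discount factor `GAMMA = 0.9` is an assumption, since the text does not specify one. The greedy policy extracted at the end is the mapping from states to actions described above.

```python
# Tiny, hypothetical MDP: states 0..3 on a line; state 3 is terminal.
# Actions: 0 = left, 1 = right. Transitions are deterministic for simplicity.
GAMMA = 0.9          # discount factor (an assumption; the text does not fix one)
STATES = [0, 1, 2, 3]
ACTIONS = [0, 1]
TERMINAL = 3


def step(state, action):
    """Known model: return (next_state, reward) for taking `action` in `state`."""
    if state == TERMINAL:
        return state, 0.0
    next_state = max(0, state - 1) if action == 0 else state + 1
    reward = 1.0 if next_state == TERMINAL else 0.0
    return next_state, reward


def lookahead(V, state, action):
    """One-step value: immediate reward plus discounted value of the next state."""
    next_state, reward = step(state, action)
    return reward + GAMMA * V[next_state]


def value_iteration(tol=1e-8):
    V = {s: 0.0 for s in STATES}
    while True:
        delta = 0.0
        for s in STATES:
            if s == TERMINAL:
                continue  # the terminal state keeps value 0
            best = max(lookahead(V, s, a) for a in ACTIONS)
            delta = max(delta, abs(best - V[s]))
            V[s] = best
        if delta < tol:
            break
    # Optimal policy: the action with the highest one-step lookahead in each state.
    policy = {s: max(ACTIONS, key=lambda a: lookahead(V, s, a))
              for s in STATES if s != TERMINAL}
    return V, policy
```

Calling `value_iteration()` yields values of about 0.81, 0.9, and 1.0 for states 0, 1, and 2, and a policy that chooses "right" (action 1) in every non-terminal state.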

The agent learns its policy through trial and error. It typically starts with little or no prior knowledge and explores the environment, often by taking random actions at first. As it interacts with the environment, it collects data about the states, actions, and rewards it encounters. This data is then used to update the agent's estimates and improve its decision-making.

One of the key challenges in reinforcement learning is the exploration-exploitation trade-off. Exploration means trying actions whose outcomes are uncertain in order to gather more information about the environment. Exploitation means using current knowledge to choose the actions that are expected to lead to high rewards. An agent that only exploits may never discover better actions, while an agent that only explores never benefits from what it has learned, so striking the right balance is crucial for learning a good policy.
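A common and simple way to balance exploration and exploitation is epsilon-greedy action selection: with a small probability epsilon the agent picks a random action (exploration), and otherwise it picks the action with the highest estimated value (exploitation). The sketch below applies it to a hypothetical three-armed bandit with Gaussian rewards; the arm means, `epsilon=0.1`, the step count, and the function names are illustrative assumptions.

```python
import random


def epsilon_greedy(q_values, epsilon, rng):
    """With probability epsilon explore (random action); otherwise exploit (argmax)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


def run_bandit(true_means, epsilon=0.1, steps=5000, seed=0):
    """Hypothetical bandit: each arm pays a noisy reward around its true mean."""
    rng = random.Random(seed)
    q = [0.0] * len(true_means)   # estimated value of each arm
    n = [0] * len(true_means)     # number of times each arm was pulled
    for _ in range(steps):
        a = epsilon_greedy(q, epsilon, rng)
        reward = rng.gauss(true_means[a], 1.0)
        n[a] += 1
        q[a] += (reward - q[a]) / n[a]  # incremental sample-mean update
    return q, n
```

With `run_bandit([0.2, 0.5, 1.0])`, the best arm (index 2) ends up pulled far more often than the others, while the 10% exploration keeps the estimates for the weaker arms from going stale.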

Many reinforcement learning algorithms approach an MDP by estimating a value function or a Q-function. The value function estimates the expected cumulative reward starting from a given state and following a particular policy. The Q-function estimates the expected cumulative reward starting from a given state, taking a particular action, and following a particular policy thereafter. These functions are updated iteratively based on the agent's experience, using techniques such as temporal difference learning or Monte Carlo methods.
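The difference between temporal difference learning and Monte Carlo methods can be sketched in a few lines. In this hypothetical tabular setup, `V` is a dictionary of state values, `alpha` is a step size, and `gamma` is a discount factor; these names and the episode format (a list of `(state, reward)` pairs, where the reward is the one received after leaving that state) are assumptions for illustration. Monte Carlo waits for a complete episode and moves each state's value toward the return that actually followed it; TD(0) updates after every step, using the current estimate of the next state's value in place of the rest of the return.

```python
def mc_update(V, episode, alpha, gamma):
    """Every-visit Monte Carlo: move V(s) toward the full observed return G."""
    G = 0.0
    # Walk the episode backwards so G accumulates the discounted future rewards.
    for state, reward in reversed(episode):
        G = reward + gamma * G
        V[state] += alpha * (G - V[state])
    return V


def td0_update(V, state, reward, next_state, alpha, gamma, done=False):
    """TD(0): move V(s) toward the bootstrapped target r + gamma * V(s')."""
    target = reward + (0.0 if done else gamma * V[next_state])
    V[state] += alpha * (target - V[state])
    return V
```

On a two-step episode A, then B, then the end, with a single reward of 1 at the end (alpha = 0.5, gamma = 1), one Monte Carlo update raises both V(A) and V(B) to 0.5, whereas one pass of TD(0) raises only V(B), because A's update used B's value before it had changed. That bootstrapping is what lets TD learn online, before an episode finishes.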

Reinforcement learning algorithms can be categorized into two main types: model-based and model-free. Model-based algorithms learn a model of the environment, including the transition probabilities and rewards, and use this model to plan their actions. Model-free algorithms, on the other hand, learn a value function or a policy directly from experience without explicitly modeling the environment.
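As an illustration of the "learn a model" step in model-based methods, the sketch below builds maximum-likelihood estimates of the transition probabilities and mean rewards by counting logged transitions. The function name and the `(state, action, reward, next_state)` tuple format are assumptions for this example. A model-based agent could then plan with these estimates (for instance with value iteration), whereas a model-free method such as Q-learning would skip this step and update its value estimates directly from the same tuples.

```python
from collections import defaultdict


def estimate_model(transitions):
    """Estimate P(s' | s, a) and the mean reward R(s, a) from logged
    (state, action, reward, next_state) tuples by simple counting."""
    counts = defaultdict(lambda: defaultdict(int))  # (s, a) -> {s': count}
    reward_sums = defaultdict(float)                # (s, a) -> total reward
    visits = defaultdict(int)                       # (s, a) -> times taken
    for s, a, r, s_next in transitions:
        counts[(s, a)][s_next] += 1
        reward_sums[(s, a)] += r
        visits[(s, a)] += 1
    P = {sa: {s_next: c / visits[sa] for s_next, c in nexts.items()}
         for sa, nexts in counts.items()}
    R = {sa: reward_sums[sa] / visits[sa] for sa in visits}
    return P, R
```

For four logged transitions from state 0 under action `"go"`, three of which landed in state 1, the estimates are P(1 | 0, go) = 0.75 and P(0 | 0, go) = 0.25.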

In recent years, reinforcement learning has achieved remarkable successes in various domains. For example, AlphaGo, a system developed by DeepMind that combined deep neural networks (trained partly with reinforcement learning) with tree search, defeated Lee Sedol, one of the world's strongest Go players, in 2016. Reinforcement learning has also been used to train robots to perform complex tasks, such as grasping objects or navigating through cluttered environments.

In conclusion, reinforcement learning is a powerful approach to training agents to make decisions based on trial and error. It relies on the concept of a Markov Decision Process and uses value functions or Q-functions to estimate the expected cumulative reward. By striking the right balance between exploration and exploitation, reinforcement learning algorithms can learn optimal policies and solve complex problems in various domains.

Applications of Reinforcement Learning in Real-World Scenarios

Reinforcement learning is a branch of artificial intelligence that focuses on training agents to make decisions based on trial and error. It is a powerful tool that has found applications in various real-world scenarios, ranging from robotics to finance. In this article, we will explore some of the most notable applications of reinforcement learning and how it is being used in these fields.

One of the most prominent applications of reinforcement learning is in robotics. With reinforcement learning, robots can learn to perform complex tasks without every behavior being programmed by hand. Autonomous driving is a related example: reinforcement learning has been explored for training driving policies that navigate through traffic and make decisions in real time, so far largely in simulation. If such approaches prove reliable, they could improve road safety and efficiency.

Healthcare is another area where reinforcement learning is being actively researched, for example to help doctors optimize treatment plans. In cancer treatment, reinforcement learning has been studied as a way to choose the dosage and timing of chemotherapy drugs, taking into account the patient's response to previous treatments. This personalized approach could lead to better outcomes and improved patient care.

Reinforcement learning has also found applications in finance, where traders can use it to develop strategies for making investment decisions. Rather than only predicting future prices, a reinforcement learning agent learns a policy (for example, when to buy, sell, or hold) that maximizes a reward such as profit, typically by training on large amounts of historical market data. This can help traders make more informed decisions and potentially increase their profits.

In energy management, reinforcement learning has been applied to optimizing the operation of power grids. Reinforcement learning algorithms can help grid operators find efficient ways to distribute electricity, taking into account factors such as demand, supply, and environmental constraints. This can lead to a more reliable and sustainable energy system.

Another interesting application of reinforcement learning is in games. Reinforcement learning algorithms have been used to train agents to play complex games such as chess, Go, and poker. By playing against themselves or other agents, these systems can learn strong strategies and reach superhuman levels of play. These results are widely regarded as milestones in artificial intelligence and have pushed the boundaries of what game-playing programs can achieve.

In conclusion, reinforcement learning has been applied to a wide range of real-world problems by enabling agents to learn from trial and error. From robotics to finance, healthcare to energy management, and even gaming, it has found applications in diverse fields. By using reinforcement learning algorithms, we can optimize decision-making processes, improve outcomes, and push the boundaries of what is possible. As technology continues to advance, we can expect to see even more applications of reinforcement learning in the future.

Exploring Reinforcement Learning Algorithms and Techniques

Reinforcement learning is a branch of machine learning that focuses on training agents to make decisions in an environment to maximize a reward. It is a powerful technique that has gained significant attention in recent years due to its ability to solve complex problems without explicit instructions. In this section, we will explore some of the most popular reinforcement learning algorithms and techniques.

One of the fundamental concepts in reinforcement learning is the Markov Decision Process (MDP). An MDP is a mathematical framework that models the interaction between an agent and an environment. It consists of a set of states, actions, transition probabilities, and rewards. The agent's goal is to learn a policy, which is a mapping from states to actions, that maximizes the expected cumulative reward.
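To make "expected cumulative reward" precise, the usual formulation discounts future rewards by a factor γ; this discount factor is an assumption here, since the text does not define one. The return from time t, the Bellman optimality equation for the optimal Q-function, and the resulting optimal policy are:

```latex
G_t = R_{t+1} + \gamma R_{t+2} + \gamma^2 R_{t+3} + \cdots = \sum_{k=0}^{\infty} \gamma^k R_{t+k+1}, \qquad 0 \le \gamma < 1

Q^*(s, a) = \sum_{s'} P(s' \mid s, a) \left[ R(s, a, s') + \gamma \max_{a'} Q^*(s', a') \right]

\pi^*(s) = \arg\max_{a} Q^*(s, a)
```

The agent's goal is to maximize the expectation of G_t; once Q* is known, acting greedily with respect to it gives an optimal policy.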

One of the simplest reinforcement learning algorithms is Q-learning. Q-learning is a model-free algorithm, meaning that it does not require knowledge of the transition probabilities. Instead, it learns an action-value function, called the Q-function, that estimates the expected cumulative reward for taking a particular action in a given state. After each step, the estimate for the state-action pair just visited is moved toward the observed reward plus the discounted value of the best action available in the next state.
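Q-learning can be sketched in tabular form on a hypothetical five-state corridor where only reaching the right end gives a reward of 1. The environment, `alpha=0.1`, `gamma=0.9`, `epsilon=0.1`, and the episode count are illustrative assumptions. Note the off-policy target: the agent behaves epsilon-greedily, but each update uses the reward plus the discounted value of the best action in the next state.

```python
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (0, 1)    # 0 = left, 1 = right


def env_step(state, action):
    """Deterministic corridor dynamics; reward 1 only on reaching the goal."""
    next_state = max(0, state - 1) if action == 0 else min(N_STATES - 1, state + 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    Q = [[0.0, 0.0] for _ in range(N_STATES)]   # Q[state][action]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy behaviour policy, breaking ties at random.
            if rng.random() < epsilon or Q[state][0] == Q[state][1]:
                action = rng.choice(ACTIONS)
            else:
                action = 0 if Q[state][0] > Q[state][1] else 1
            next_state, reward, done = env_step(state, action)
            # Off-policy target: reward plus discounted value of the best next action.
            target = reward if done else reward + gamma * max(Q[next_state])
            Q[state][action] += alpha * (target - Q[state][action])
            state = next_state
    return Q
```

After training, the greedy action in every non-goal state is "right" (action 1), and `Q[3][1]` is close to 1.0.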

Another popular algorithm is the Deep Q-Network (DQN). DQN combines Q-learning with deep neural networks to handle high-dimensional state spaces. A deep neural network approximates the Q-function, allowing the agent to learn from raw sensory inputs such as images. DQN has achieved impressive results, most famously learning to play many Atari 2600 games directly from screen pixels, and Q-learning with deep networks has also been applied to robotic control.
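Two ingredients commonly used in DQN are an experience replay buffer, which stores past transitions and trains on random minibatches of them, and a target network, a periodically refreshed frozen copy of the Q-network used to compute regression targets. The pure-Python sketch below shows only those two pieces; the neural network itself is abstracted as a hypothetical `target_q(state)` callable that returns a list of action values, and the class and function names are assumptions for illustration.

```python
import random
from collections import deque


class ReplayBuffer:
    """Fixed-capacity store of (s, a, r, s_next, done) transitions; oldest evicted first."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, s, a, r, s_next, done):
        self.buffer.append((s, a, r, s_next, done))

    def sample(self, batch_size, rng=random):
        # Uniform random minibatches break up correlations between consecutive steps.
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def dqn_targets(batch, target_q, gamma):
    """Regression targets y = r + gamma * max_a' Q_target(s', a'), or just r at episode end.

    `target_q(state)` is a hypothetical stand-in for the frozen target network:
    it returns a list of action values for `state`.
    """
    return [r if done else r + gamma * max(target_q(s_next))
            for (_s, _a, r, s_next, done) in batch]
```

In a full implementation, the online network would be trained to move its predicted Q(s, a) for each sampled transition toward these targets, and the target network's weights would be copied from the online network every fixed number of steps.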

Policy gradient methods are another class of reinforcement learning algorithms. Unlike value-based methods like Q-learning, policy gradient methods directly optimize the policy. They learn a parameterized policy that maps states to actions, and the parameters are updated using gradient ascent to maximize the expected cumulative reward. Policy gradient methods have been successful in tasks with continuous action spaces, such as robotic control.
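A minimal policy-gradient sketch is REINFORCE applied to a hypothetical two-armed bandit. The policy is a softmax over learnable preferences `theta`; for a softmax policy, the gradient of log π(a) with respect to preference k is 1 (if k = a) minus π(k). Each step moves `theta` along that gradient (gradient ascent), scaled by how much better the reward was than a running-average baseline. The arm means, learning rate, step count, and the choice of baseline are assumptions.

```python
import math
import random


def softmax(prefs):
    m = max(prefs)                       # subtract the max for numerical stability
    exps = [math.exp(p - m) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def reinforce_bandit(arm_means, steps=2000, lr=0.1, seed=0):
    """REINFORCE with a softmax policy over arms and a running-average reward baseline."""
    rng = random.Random(seed)
    theta = [0.0] * len(arm_means)       # policy parameters (action preferences)
    baseline = 0.0
    for t in range(1, steps + 1):
        probs = softmax(theta)
        action = rng.choices(range(len(theta)), weights=probs)[0]
        reward = rng.gauss(arm_means[action], 1.0)
        advantage = reward - baseline          # baseline uses rewards before this step
        baseline += (reward - baseline) / t    # running mean of rewards so far
        for k in range(len(theta)):
            # Gradient of log pi(action) with respect to theta[k] for a softmax policy.
            grad_log = (1.0 if k == action else 0.0) - probs[k]
            theta[k] += lr * advantage * grad_log   # gradient ascent step
    return softmax(theta)
```

With arm means `[0.0, 1.0]`, the returned action probabilities put most of their mass on the second arm.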

In addition to these algorithms, several techniques can improve the performance of reinforcement learning agents. One is managing the exploration-exploitation trade-off. Exploration refers to the agent trying out different actions to discover new strategies, while exploitation refers to the agent choosing actions that have been successful in the past. Striking the right balance between exploration and exploitation is crucial for effective learning.
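One common way to shift gradually from exploration toward exploitation is to decay epsilon in epsilon-greedy action selection over the course of training. The linear schedule below is a sketch; the function name, the start and end values, and the 10,000-step horizon are illustrative assumptions rather than recommended settings.

```python
def linear_epsilon(step, start=1.0, end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from `start` to `end` over `decay_steps`, then hold at `end`."""
    fraction = min(step / decay_steps, 1.0)
    # Interpolate between start and end; written this way so fraction = 1 gives `end` exactly.
    return (1.0 - fraction) * start + fraction * end
```

At each training step the agent would explore with probability `linear_epsilon(step)`, so early training is almost entirely random and later training is mostly greedy.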

Another technique is reward shaping, which adds extra reward terms to the environment's reward to guide the agent's learning process. Reward shaping can help the agent learn faster and achieve better performance by providing more frequent feedback. However, designing shaping rewards is challenging and requires domain knowledge: a poorly designed shaping term can lead the agent to optimize the extra reward instead of the intended task.
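One well-studied form of reward shaping is potential-based shaping, in which the extra reward is the discounted change in a potential function Phi over states: F = gamma * Phi(s') - Phi(s). This form is known to leave the optimal policy unchanged, which protects against the agent learning to exploit the shaping term instead of solving the task. The sketch below uses a hypothetical gridworld whose potential is the negative Manhattan distance to a goal at (3, 3); the function names and the goal location are assumptions.

```python
def shaped_reward(reward, state, next_state, potential, gamma, done=False):
    """Potential-based shaping: r + gamma * Phi(s') - Phi(s).

    The potential of a terminal state is treated as 0, as the
    policy-preserving form requires for episodic tasks.
    """
    next_potential = 0.0 if done else potential(next_state)
    return reward + gamma * next_potential - potential(state)


def distance_potential(state, goal=(3, 3)):
    """Hypothetical potential: negative Manhattan distance to the goal cell."""
    return -(abs(state[0] - goal[0]) + abs(state[1] - goal[1]))
```

Moving from (0, 0) to (0, 1) with gamma = 1 and no environment reward yields a shaped reward of +1, because the agent got one step closer to the goal.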

Finally, transfer learning is a technique that allows an agent to leverage knowledge learned in one task to improve performance in another related task. Transfer learning can significantly speed up the learning process and enable agents to generalize across different environments.

In conclusion, reinforcement learning is a powerful approach to train agents to make decisions in complex environments. It offers a range of algorithms and techniques that can be applied to various domains. From simple Q-learning to advanced deep reinforcement learning methods, there are numerous options to choose from. By understanding these algorithms and techniques, researchers and practitioners can harness the full potential of reinforcement learning to solve real-world problems.

Q&A

1. What is reinforcement learning?

Reinforcement learning is a type of machine learning where an agent learns to make decisions by interacting with an environment. It involves learning from feedback in the form of rewards or punishments to maximize a cumulative reward over time.

2. How does reinforcement learning work?

In reinforcement learning, an agent takes actions in an environment and receives feedback in the form of rewards or penalties. The agent's goal is to learn a policy that maximizes the expected cumulative reward. This is achieved through trial and error, where the agent explores different actions and learns from the outcomes to improve its decision-making abilities.

3. What are some applications of reinforcement learning?

Reinforcement learning has various applications, including robotics, game playing, autonomous vehicles, recommendation systems, and resource management. It has been used to train robots to perform complex tasks, teach computers to play games like chess and Go at a high level, and optimize resource allocation in industries such as energy and transportation.

Conclusion

In conclusion, "An Introduction to Reinforcement Learning" provides an overview of the field of reinforcement learning. It covers the fundamental concepts, algorithms, and applications of reinforcement learning, including Markov decision processes, value functions, Q-learning and deep Q-networks, policy optimization, and the exploration-exploitation trade-off. Overall, it offers an accessible starting point for anyone interested in understanding and applying reinforcement learning techniques.